How to talk to machines

On Google’s viral prompt engineering paper, new literacies, and the shrinking space between design and code

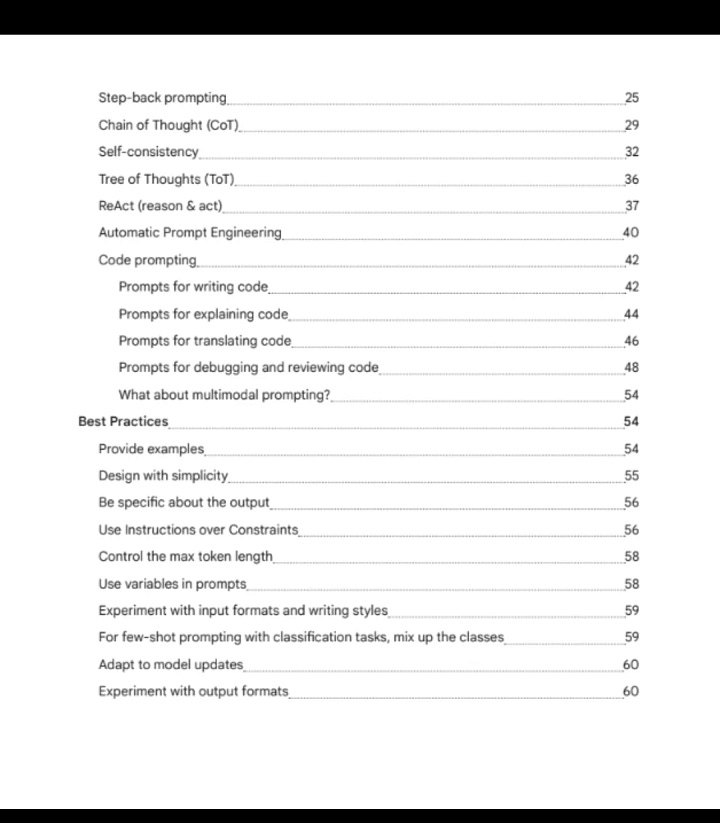

Google recently released a 69-page whitepaper on prompt engineering, a surprisingly practical guide for working with large language models (LLMs). Authored by Lee Boonstra, the paper outlines best practices for structuring inputs, from zero-shot and role-based prompting to more advanced techniques like Chain-of-Thought and ReAct.

The techniques are helpful, but perhaps more telling is what the paper represents: Prompting is no longer a fringe skill. It’s the new interface.

Prompting has quickly become the connective layer between human intent and machine behavior. It’s the UI, the command line, the creative brief, and the query language—written in natural language but requiring an unnatural level of specificity.

“Computation is not something you can fully grasp after training in a ‘learn to code’ boot camp,” writes John Maeda in his 2019 book, How to Speak Machine. “It’s more like a foreign country... knowing the language is not enough.” Prompting is quickly becoming the language we use to navigate that world.

Prompting as user interface design

At its core, prompting isn’t just a way to get an answer—it’s how we design what an AI does. The structure of a prompt shapes the model’s behavior. Tone, format, and framing all influence output. It’s interaction design, expressed through language.

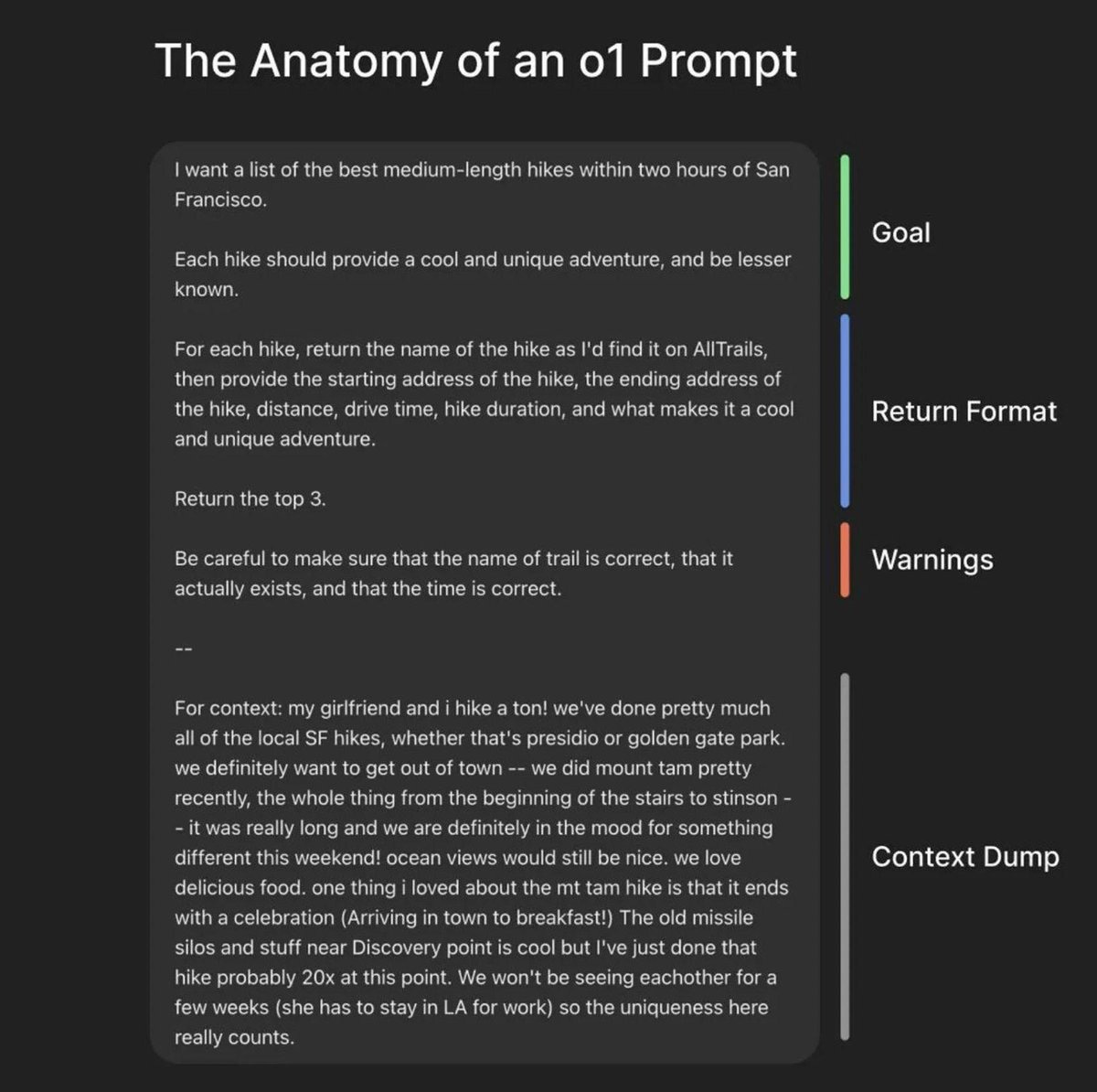

Greg Brockman, president of OpenAI, retweeted Ben Hylak’s breakdown of a strong prompt into four parts: goal, return format, warnings, and context. It reads more like UX documentation than programming. Good prompting, like good product design, is less about cleverness and more about clarity.
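To make that four-part structure concrete, here is a minimal sketch in Python. The `PromptSpec` class, its field names, and the sample prompt are all hypothetical, invented for illustration; they are not taken from Hylak's breakdown or any library.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four parts: goal, return format, warnings, context."""
    goal: str
    return_format: str
    warnings: list[str] = field(default_factory=list)
    context: str = ""

    def render(self) -> str:
        sections = [
            ("Goal", self.goal),
            ("Return format", self.return_format),
            ("Warnings", "\n".join(f"- {w}" for w in self.warnings)),
            ("Context", self.context),
        ]
        # Skip any section left empty so the prompt stays tight.
        return "\n\n".join(f"{title}:\n{body}" for title, body in sections if body)


# Illustrative values only.
spec = PromptSpec(
    goal="Suggest three names for a budgeting app aimed at students.",
    return_format="A numbered list, one name per line, each with a one-sentence rationale.",
    warnings=["Avoid names already used by well-known finance apps."],
    context="The app is free, mobile-first, and has a playful tone.",
)
print(spec.render())
```

Writing the parts as named fields is one way to notice which of them you left out before the model does.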

Google’s whitepaper echoes this. Use simple language. Provide examples. Define output formats. Specify constraints like token limits. And, where appropriate, assign the model a role: a copywriter, a doctor, a customer support agent.

Prompting as a form of literacy

Prompting well means understanding how machines process information and how humans misunderstand them. Large language models are literal, probabilistic systems that lack memory, context, and intuition. They’re tireless and scalable—but also rigid and easily confused.

“You don’t have to use exact pedantic language about basic human concepts,” says Sean Thielen-Esparza. “But with LLMs, you do—and you generally have to give them examples.” Prompting becomes an act of translation: making your reasoning legible to something that lacks common sense.

More than a technical skill, prompting requires a kind of cognitive scaffolding. You have to anticipate gaps in understanding, define ambiguous terms, and articulate what you’re trying to accomplish with precision. It’s metacognition: thinking about how you think. John Maeda calls this computational thinking: deconstructing a problem, identifying patterns, and structuring instructions that match how machines interpret meaning.

It’s not so different from writing a design brief or running a workshop. Prompting slows you down, clarifies your intent, and forces you to confront what you’re really asking.

Prompting as infrastructure

As AI tools become more integrated into workflows, prompting is being formalized and systematized. Tools like Deep Research, O1 Pro, Cursor, and Claude Code scaffold or auto-generate prompts based on user goals.

@BuccoCapital describes using O1 Pro to create “3–5 page prompts that generate 60–100 page, very thorough reports” by combining context, structured formats, and a few high-level questions. These aren’t just prompts, they’re pipelines.

Prompting is becoming infrastructure. You may not always write the prompt yourself, but you’ll likely design the system that does. Just as design systems abstract complexity in visual design, prompt systems do the same for language by layering templates, logic, and context over raw inputs.
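A prompt system in this sense can be quite small. The sketch below is one possible shape, not how any of the tools named above work internally: the template text, the `build_prompt` function, and the `audience` rule are assumptions made up for the example. It uses only Python's standard `string.Template`.

```python
from string import Template

# Hypothetical base template; the $placeholders are filled in by code, not by hand.
REPORT_TEMPLATE = Template(
    "You are a $role.\n\n"
    "Context:\n$context\n\n"
    "Task:\n$task\n\n"
    "Format:\n$output_format"
)


def build_prompt(task: str, context_items: list[str], audience: str = "general") -> str:
    """Layer a template, a little logic, and context over a raw user request."""
    # Logic layer: pick a format based on who will read the result.
    if audience == "executive":
        output_format = "A five-bullet summary, no jargon."
    else:
        output_format = "A structured report with headed sections."
    # Context layer: fold supporting notes into one block.
    context = "\n".join(f"- {item}" for item in context_items) or "- (none provided)"
    return REPORT_TEMPLATE.substitute(
        role="research analyst",
        context=context,
        task=task.strip(),
        output_format=output_format,
    )


print(build_prompt("Compare two note-taking apps.", ["Budget: free tier only"], audience="executive"))
```

The person typing the task never sees the role, the format rule, or the context block. That is the abstraction: the design decisions live in the system, not in each request.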

Prompting as a stopgap

Prompting, in its current form, exists in a purgatorial state. “Writing text back and forth with an LLM is like we’re all the way back to the era of command terminals,” says Andrej Karpathy. “The correct output is a lot closer to custom web apps—spatial, multimodal, interactive.”

“Prompting isn’t the future. It’s a stopgap,” says Levi Brooks. “UX for AI needs to be reimagined from scratch.” Until then, it’s all we have.

But transitional doesn’t mean disposable. Prompting reveals how the system works: what it knows, assumes, forgets. It collapses the distance between idea and implementation, while exposing all the fuzziness we tend to skip over in the middle. The whitepaper not only codifies techniques but also documents a shift: from hacks to heuristics. From experimentation to fluency.

Prompting as a mirror

More than a mechanism, prompting is a reflection of how we think. Good prompts aren’t just functional, they’re introspective. They require clarity, structure, and refinement. They’re not just for models. They’re for us.

Nan Yu puts it well: “Prompting is a good lesson in interpersonal communication. What do you know that the model doesn’t? What unspoken constraints are you assuming? What have you already tried that didn’t work?” Designers already ask these questions. Prompting just makes the thinking more visible.

Make it usable

If prompting is here to stay—at least for now—it helps to be specific. Google’s whitepaper offers plenty of practical guidance. A few standouts:

Be direct. Avoid ambiguity. Use declarative language.

Specify the format. Lists, tables, summaries, JSON—structure improves performance.

Use examples. One-shot or few-shot examples often outperform abstract instructions.

Set a role. “You are a senior UX researcher…” helps the model narrow its scope.

Iterate. Prompting is a loop. Test, refine, repeat.
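Several of these tips can be combined in a few lines. The following is a rough sketch under stated assumptions: the feedback examples, the `theme` and `severity` keys, and both function names are invented here, and no model is actually called. It builds a role-plus-few-shot prompt that asks for JSON, then checks whether a reply matches the requested format, which is the "test" half of the loop.

```python
import json

# Hypothetical examples; in practice these would come from real, reviewed data.
EXAMPLES = [
    ("The checkout button is hidden below the fold.", {"theme": "navigation", "severity": "high"}),
    ("I like the new colors.", {"theme": "visual design", "severity": "low"}),
]


def few_shot_prompt(feedback: str) -> str:
    lines = [
        "You are a senior UX researcher.",  # set a role
        "Classify the feedback. Reply with JSON only, using the keys "
        '"theme" and "severity".',  # be direct, specify the format
        "",
    ]
    for text, label in EXAMPLES:  # use examples
        lines += [f"Feedback: {text}", f"JSON: {json.dumps(label)}", ""]
    lines += [f"Feedback: {feedback}", "JSON:"]
    return "\n".join(lines)


def is_valid_reply(reply: str) -> bool:
    """The 'test' step of the loop: does the reply match the requested format?"""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and set(data) == {"theme", "severity"}


print(few_shot_prompt("Search results load slowly."))
```

If `is_valid_reply` rejects what comes back, that is a signal to refine the instructions or the examples and try again.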

Each prompt is a prototype. Not just a request, but a sketch of your thinking.

Prompting well is less about memorizing techniques and more about adopting a mindset. It’s the kind of fluency John advocates—where code isn’t the barrier and language isn’t the solution, but both are part of a shared grammar of systems and intent.

You don’t need to master every method in the whitepaper. But if you’re working with AI, you’re already prompting. The better you get at it, the more clearly your thinking—and your outputs—will take shape.

—Carly