Should designers train models?

Justin Ouellette on interface as truth-telling, designing in the fog, and why he’s not afraid to be left behind

People are asking a lot of designers lately: Designers should be product engineers. Designers should do sales. Designers should be founders. And, yes—once and for all—designers should code. But when Russ Maschmeyer tweeted: “Designers should learn to train models,” I thought: maybe we’d gone too far.

Still, the thread was sincere and even included resources. So by the time Justin Ouellette entered the discourse, I was listening.

The sentiment echoes a recent conversation I had with Jessica Hische (who is also Russ’s partner), but with a different flavor. Jessica and Justin both agree that designers need to understand the tools they use; Justin, though, is most concerned with how that understanding shapes the design of consumer products, especially those that embed AI.

AI as a design challenge

Justin sees AI as a design problem. That framing changes how he engages with it: not as a shiny new feature to bolt on, but as a material with real constraints. “The more I see it as a design need,” he says, “the more interesting it becomes.”

He’s neither evangelist nor abstainer. He’s skeptical, but curious. Cautious, but hands-on. “I’m critical of it because I spend a lot of time thinking about it,” he tells me. “My irritation has everything to do with how often [these tools] are deployed in thoughtless ways and how rapidly it’s plunging us into unknown territory.”

Justin’s been in the game long enough to see a few hype cycles come and go. He built Muxtape before streaming had a business model, shaped the frontend at Vimeo, led Special Projects at Tumblr, and worked in the New York Times R&D Lab before “futures-thinking” had a name. These days, he’s designing interfaces at Output, a new startup focused on workplace communication, and, with some reluctance, integrating AI. He also recently became a parent, which, he notes, hasn’t made it easier to keep up with the discourse. “I feel lucky if I have time to catch up with [my wife] Bri at the end of the day, much less the conversation in tech.”

Know the tool, even if you don’t use it

Justin doesn’t believe every designer needs to train a model from scratch, but he does believe we need to understand how models work. “It’s malpractice not to explore what they’re good at,” he says. “You need to understand their strengths and limitations.” If we don’t, we risk misusing the tools or, worse, misrepresenting them.

He’s tried AI code editors that promised pair programming with a ghost, but the reality fell short. “I work with large, mature codebases, the kind with history and edge cases,” he explains. “I wanted a tool that could sit beside me, not hallucinate at me.” Still, he values the attempt.

And while Justin has deep engineering chops, he’s not precious about them. “Writing code is no substitute for using design tools meant for designing UI,” he says. He’s more excited by expressive tools like Rive, Cavalry, and Play that give designers fluid, cross-platform control.

What AI is good for (and what it’s not)

Justin wants us to think more critically about what AI is actually good for. “It’s good for chopping stuff up, pattern recognition—the kind of thing that works even if it’s wrong 20% of the time.”

In other words: fuzziness is a feature. He points to astronomy, medical imaging, and data analysis as legitimate use cases, domains where ambiguity is expected and patterns matter more than precision. “I love hearing stories about software that detects breast cancer five years early. That’s awesome. That’s what you want to see.”

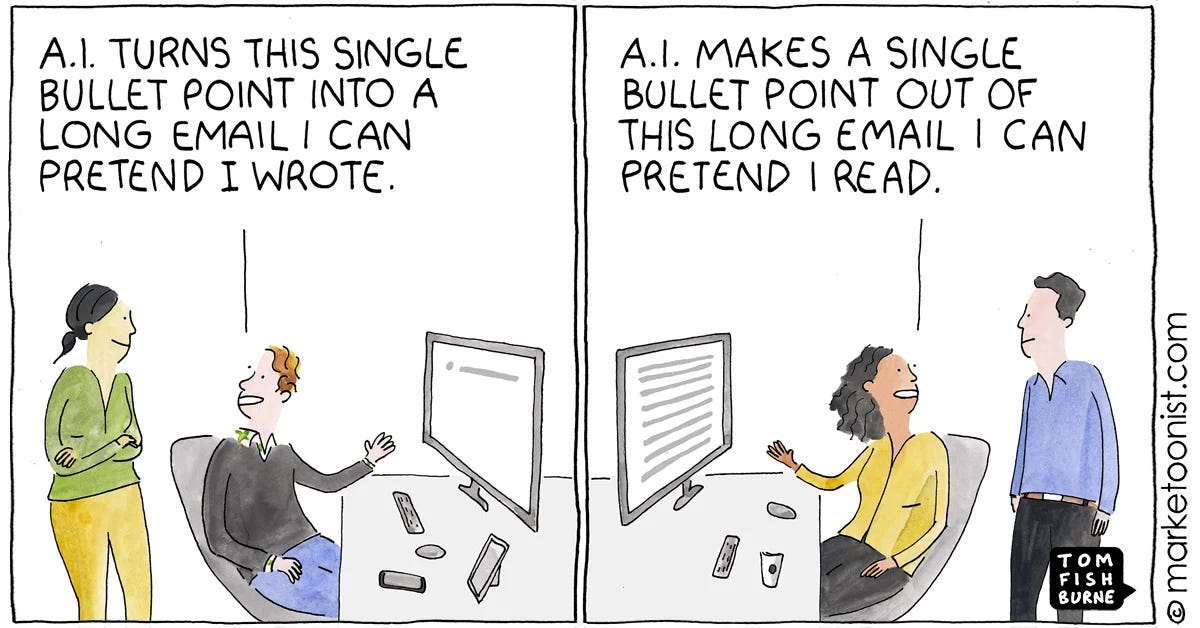

He’s also interested in how AI might tame the fire hose of modern communication. Done right, it could help us separate signal from noise and make space for more thoughtful interaction. At Output, the AI integrations he’s designing are subtle, sometimes invisible. “We want to put it to use where it is useful. It’s no more complicated than that.”

But not all problems are pattern problems. Too often, we apply AI to situations that demand clarity, context, or care and then act surprised when it falls short. “The danger,” he warns, “is when we pretend these tools are more trustworthy than they are.”

Interfaces should reveal the seams

Justin’s strongest critique is aimed not at the models themselves, but at the metaphors we build around them. He’s especially wary of chatbot-style summaries that flatten nuance and invent facts. He recalls an interaction where ChatGPT completely fabricated a medical test: “It just made something up. That’s unacceptable.”

What’s missing, he says, is transparency. “The interface should tell you some truth.” He’s “really anxious to see more user interfaces that reveal and acknowledge some truths about AI and its process and fuzziness.” He wants tools that surface uncertainty, signal approximation, and show their seams.

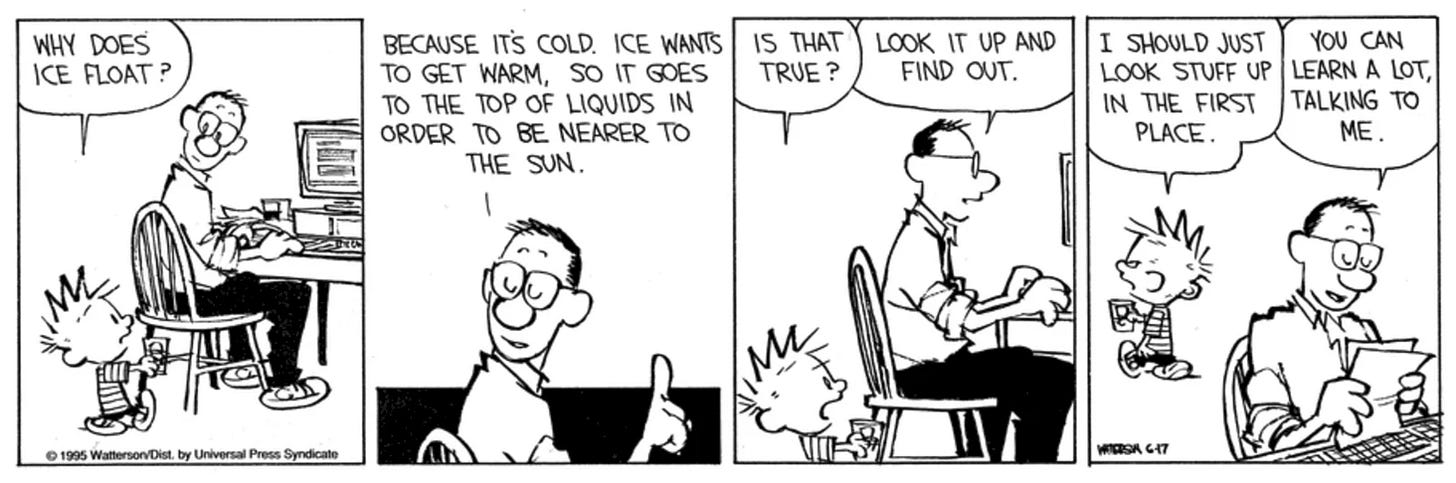

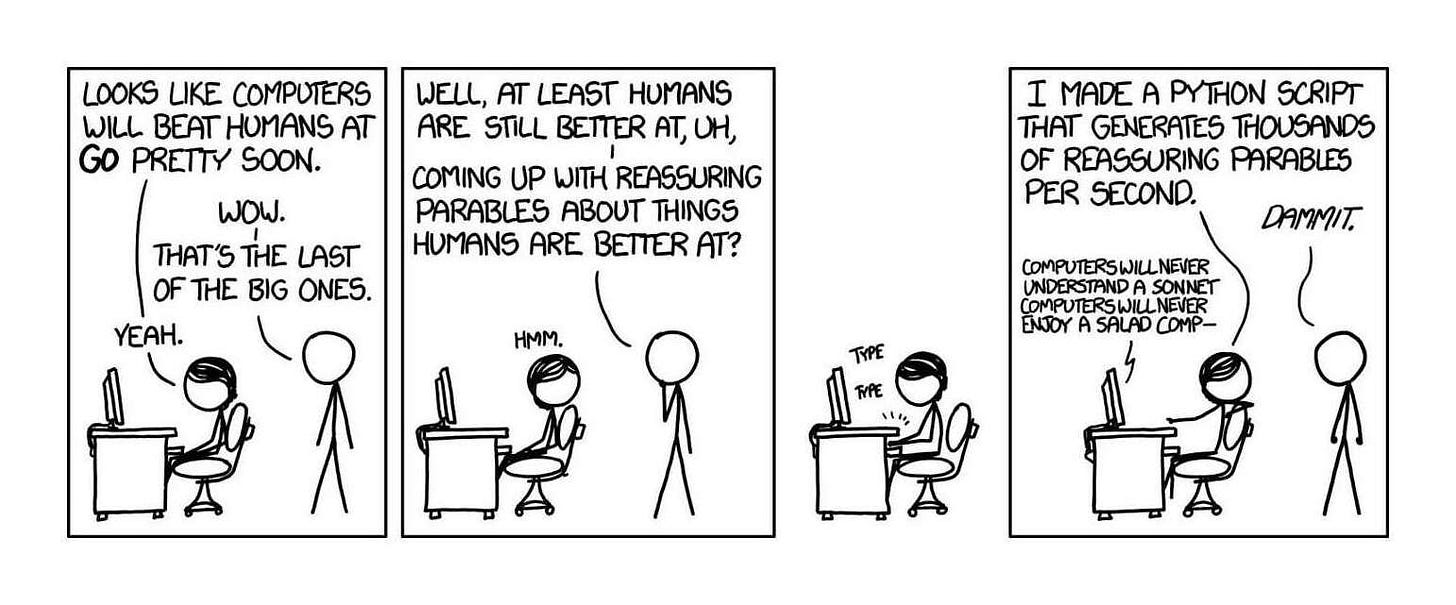

Justin keeps coming back to two references: (1) this Calvin & Hobbes comic, and (2) Hubert Dreyfus’s book What Computers Can’t Do. Dreyfus, writing in the ’70s, argued that AI would always hit a wall: machines only ever get a model of the world, while people have to face the world’s mess and unpredictability every second. “I won’t claim to know much about philosophy,” says Justin. “But it rings true to me that a question like that can’t be resolved with math or empirical measurement alone.”

Making things that last

Whether he’s using Xcode or Blender or literal wood, Justin is trying to build things that last. He tells a story about a colleague at Vimeo who once created a calendar where each square represented a week of life, calculated up to the average lifespan of 79 years. Seeing how few weeks were left made an impression: “I’d rather make a few beautiful things before my calendar runs out.”

He’s not racing to stay relevant. He’s working deliberately, with care rather than speed. That care extends to his community. He names Russ, Jessica, Reggie James, and Cameron Koczon as people he sees building slowly and thoughtfully. “It’s a small room,” he says, “but that’s who should be building the next generation of tools: not to scale, not to impress, just to understand.”

Between Output and parenting, Justin’s days are full, but that, too, is part of the point: to be deliberate with time, generous with care, and attentive to the things that matter most—whether they’re tools, texts, or toddlers.

Craft, constraints, and time

As the conversation drifts from tools to time, Justin shares what motivates him now: “If I’ve got an hour at the end of the day, I want to spend it with my brushes and my pencils. I’d rather spend my time making things from the heart and the hand than generating them.”

He’s not trying to win the AI race. He’s trying to shape the tools that will last. “Someone has to figure out what these tools are actually good for. That’s what keeps me coming back.”

Additional resources

In a follow-up to his initial tweet, Russ shares the following resources:

Hugging Face LLM course: A free course on large language models built around the Hugging Face ecosystem

Karpathy’s GPT from scratch: A walk-through of training GPT-2

Axolotl: A tool for fine-tuning large language models
